Best Practice: Handling Null Results

- BioSource Faculty

- Nov 3, 2025

Updated: Nov 3, 2025

We have based our Best Practice series on Dr. Beth Morling's Research Methods in Psychology (5th ed.). We encourage you to purchase it for your bookshelf. If you teach research methods, consider adopting this best-of-class text for your classes.

Dr. Beth Morling is a distinguished Fulbright scholar and was honored as the 2014 Professor of the Year by the Carnegie Foundation for the Advancement of Teaching.

With more than two decades of experience as a researcher and professor of research methods, she is an internationally recognized expert and a passionate advocate for the Research Methods course. Morling's primary objective is to empower students to become discerning critical thinkers, capable of evaluating research and claims presented in the media.

In this post, we will explore a pivotal question addressed by Chapter 11: "How should we handle null results?"

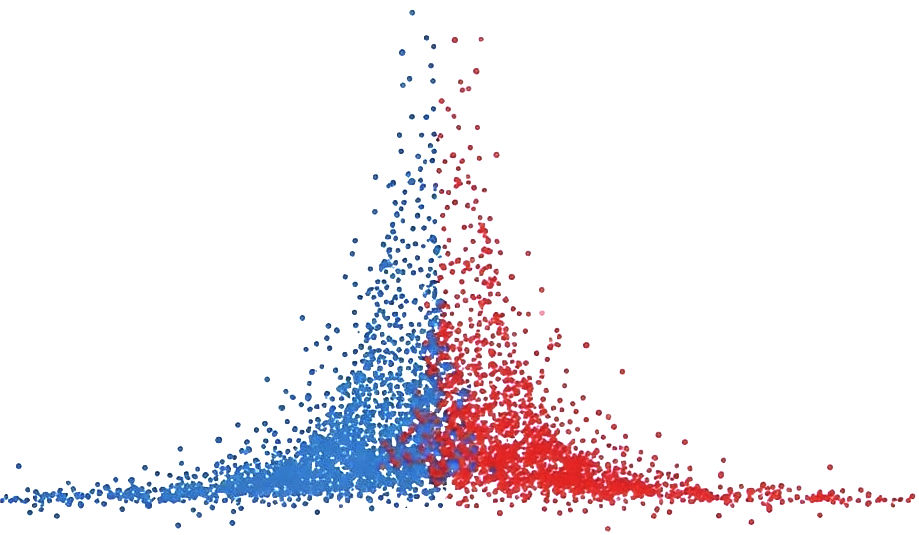

Null results, defined as outcomes where no statistically significant difference is observed between experimental groups, are often misunderstood. Statistical significance is typically judged with a p-value: the probability of obtaining results at least as extreme as those observed if there were truly no effect. When the p-value falls below a threshold such as 0.05, the result is called statistically significant; when it does not, the result is deemed “non-significant” or “null.” The graphic below shows overlapping scores from two distributions seen in null outcomes.

However, a null result does not always mean that the independent variable (the factor manipulated by the researcher) does not affect the dependent variable (the outcome being measured).

Rather, a null result raises critical methodological questions: Was the manipulation potent enough? Was the measurement sensitive and accurate? Was the sample large enough? Did the study have adequate statistical power—the likelihood of detecting an effect if one truly exists (Cohen, 1992)?

The presence of a null result should not be taken as the endpoint of inquiry, but rather as an invitation to reevaluate the theoretical assumptions, measurement tools, and experimental design. For example, Frattaroli (2006) found mixed results in expressive writing studies. A study showing no effect of journaling on test anxiety may not disprove the emotion-regulation theory but could instead reflect issues such as short intervention length, superficial prompts, or participant disengagement. Thus, null results, when carefully examined, can yield vital information for theory refinement and methodological advancement.

Insufficient Between-Groups Differences

One common cause of null results is insufficient contrast between the conditions being compared. In an experiment, conditions must be distinct enough to produce a meaningful change in the dependent variable.

When the manipulation of the independent variable is too subtle—a situation referred to as a weak manipulation—the psychological process of interest may not be triggered.

For instance, attempting to induce stress by showing a slightly suspenseful clip may not sufficiently activate the participants’ hypothalamic-pituitary-adrenal (HPA) axis, leaving cortisol levels or self-reported anxiety unchanged (Aronson et al., 2019).

Another factor contributing to null results is the use of insensitive measures, which are tools or scales that cannot detect subtle but important differences in outcomes. Imagine testing whether a gratitude journal improves mood using a single yes/no question like "Did you feel happy today?" This binary measure lacks the granularity required to detect moderate improvements. A more valid and sensitive instrument would be the Positive and Negative Affect Schedule (PANAS; Watson et al., 1988), which captures mood variations across multiple dimensions on a continuous scale.

Instruments may also suffer from ceiling effects, where most scores cluster at the high end, or floor effects, where scores cluster at the low end. For example, if a math test is too easy, most students will achieve near-perfect scores, preventing the detection of any improvements from a tutoring program. The issue is not the absence of learning but the inability of the measure to capture that learning. Pilot testing the task can help ensure that scores are appropriately distributed to detect differences.
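
The ceiling effect described above can be illustrated with a small simulation. This is only a sketch under invented assumptions: the latent ability means (98 and 104), the standard deviation (10), and the function name `simulate_ceiling` are hypothetical and do not come from any study cited here.

```python
import random
import statistics

def simulate_ceiling(max_score, n=2000, seed=1):
    """Observed tutored-minus-control mean difference when scores are capped."""
    rng = random.Random(seed)
    # Hypothetical latent ability: control mean 98, tutored mean 104, SD 10.
    # min() caps every score at max_score, mimicking a test that is too easy.
    control = [min(rng.gauss(98, 10), max_score) for _ in range(n)]
    tutored = [min(rng.gauss(104, 10), max_score) for _ in range(n)]
    return statistics.mean(tutored) - statistics.mean(control)

easy_test = simulate_ceiling(max_score=100)    # many scores pile up at the cap
hard_test = simulate_ceiling(max_score=1000)   # cap is never reached

print(f"Observed difference on the capped test:   {easy_test:.2f}")
print(f"Observed difference on the uncapped test: {hard_test:.2f}")
```

Because both calls use the same seed, the underlying abilities are identical and only the cap differs. The capped test reports a smaller difference even though the simulated tutoring effect is unchanged.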

To address these issues, researchers should implement manipulation checks, which are auxiliary measures included in the study to confirm that the manipulation had the intended psychological effect (Hauser et al., 2018). If participants in a “stress” condition do not report feeling more stressed than controls, then a null finding on downstream variables more plausibly reflects a failed manipulation than the absence of an effect.

Excessive Within-Groups Variability

Even with a robust manipulation and sensitive measurement, a study can yield null results if within-group variability—differences among participants in the same condition—is too high. High variability increases the standard error, which is a statistical estimate of the spread of sample means. This, in turn, widens confidence intervals and makes it harder to detect significant differences between group means.
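
To make the link between variability and interval width concrete, here is a minimal sketch that computes the approximate 95% confidence interval half-width for a difference between two group means. It assumes equal group sizes, equal standard deviations, and a normal approximation (z = 1.96); the function name `ci_half_width` and the example values are hypothetical.

```python
import math

def ci_half_width(sd, n_per_group, z=1.96):
    """Approximate 95% CI half-width for a difference between two means.

    Assumes equal group sizes and equal SDs, and uses the normal
    approximation rather than the t distribution.
    """
    se_diff = sd * math.sqrt(2 / n_per_group)  # standard error of the difference
    return z * se_diff

low_noise = ci_half_width(sd=5, n_per_group=30)
high_noise = ci_half_width(sd=15, n_per_group=30)

print(f"Half-width with SD = 5:  {low_noise:.2f}")
print(f"Half-width with SD = 15: {high_noise:.2f}")
```

With the sample size held constant, tripling the within-group standard deviation triples the half-width, so the same true difference becomes much harder to distinguish from zero.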

One major contributor to within-group variability is measurement error, which arises when the instruments used are unreliable or inconsistently applied. For example, a poorly designed survey on social anxiety might contain ambiguous questions or inconsistent scales, introducing random noise into the results. Reliable tools are those that produce consistent results under consistent conditions, and they can be evaluated using metrics like Cronbach’s alpha or test-retest reliability.
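
As a rough illustration of how internal consistency can be checked, the sketch below computes Cronbach's alpha from its standard formula, alpha = k / (k - 1) × (1 − sum of item variances / variance of total scores). The function name `cronbach_alpha` and the five-respondent, three-item data set are invented for the example.

```python
import statistics

def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(item_scores[0])
    items = list(zip(*item_scores))  # transpose: one tuple of scores per item
    item_variances = [statistics.variance(col) for col in items]
    total_variance = statistics.variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

# Hypothetical responses: 5 participants answering 3 items on a 1-5 scale.
responses = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 5, 4],
    [3, 3, 4],
]
alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Statistical packages offer more complete reliability analyses; the point here is only that alpha rises as items vary together more than they vary separately.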

Another source of variability is individual differences—stable traits like personality, intelligence, and baseline motivation that vary naturally among people. If a study on sleep deprivation and cognitive performance includes both morning and evening chronotypes without accounting for these differences, the variance introduced may swamp the effect of the sleep manipulation.

A third factor is situation noise, which refers to uncontrolled environmental influences such as lighting, noise, or experimenter demeanor. Suppose participants complete a cognitive task under differing conditions—some in a quiet lab, others in a busy hallway. These contextual inconsistencies introduce error variance that can obscure real effects.

To reduce within-group variability, researchers can adopt within-subjects designs, where each participant serves as their own control. Because each person is compared with themselves, stable between-person differences drop out of the comparison between conditions, which makes this design especially useful when individual differences are expected to be large.
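
The advantage of a within-subjects comparison can be sketched with simulated data. Everything here is an assumption made for illustration: 40 participants, large stable individual differences (SD 15), a small true effect (+3), and modest measurement noise (SD 4). The same two columns of scores are analyzed first as if they came from independent groups and then as paired difference scores.

```python
import math
import random
import statistics

rng = random.Random(42)
n = 40

# Hypothetical data: each participant has a stable baseline and is measured
# once in each condition with some random noise.
baseline = [rng.gauss(100, 15) for _ in range(n)]
control = [b + rng.gauss(0, 4) for b in baseline]
treated = [b + 3 + rng.gauss(0, 4) for b in baseline]

# Analysis 1: treat the columns as two independent groups, so the
# individual differences stay in the error term.
se_between = math.sqrt(
    statistics.variance(control) / n + statistics.variance(treated) / n
)

# Analysis 2: use each participant's own difference score, which cancels
# the stable baseline.
diffs = [t - c for t, c in zip(treated, control)]
se_within = statistics.stdev(diffs) / math.sqrt(n)

print(f"SE treating groups as independent: {se_between:.2f}")
print(f"SE using paired differences:       {se_within:.2f}")
```

The paired analysis yields a much smaller standard error because the baseline differences between people no longer count as noise.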

Other strategies include standardizing procedures, carefully training experimenters, and increasing the sample size, which reduces the standard error and improves the reliability of group comparisons.

The Importance of Power and Precision

A study’s statistical power—the probability of correctly rejecting a false null hypothesis—depends on four main factors: the size of the effect, the sample size, the chosen significance level (alpha), and the variability in the data. When power is low, the study may fail to detect an effect even when one exists, leading to a Type II error (Cohen, 1988).

Power can be estimated using power analysis, a statistical technique that determines the minimum number of participants needed to detect an effect of a given size at a specified significance level and target power (commonly 0.80). This is typically done before data collection and is crucial for avoiding underpowered studies that cannot yield meaningful results.
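
A minimal sketch of an a priori power analysis for comparing two independent group means follows. It uses the common normal-approximation formula n = 2 × ((z for 1 − alpha/2 + z for power) / d)², which tends to give slightly smaller numbers than exact t-based calculations in dedicated power software. The function name `n_per_group` is hypothetical, and the effect sizes are Cohen's conventional small, medium, and large values for d.

```python
import math
from statistics import NormalDist

def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-tailed comparison
    of two independent means (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-tailed test
    z_power = z.inv_cdf(power)          # quantile matching the target power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)

for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
    print(f"{label} effect (d = {d}): about {n_per_group(d)} per group")
```

The required sample grows rapidly as the expected effect shrinks, which is why studies of subtle effects with small samples so often end in null results.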

Precision, on the other hand, refers to how accurately a study estimates the true effect size. High precision is indicated by narrow confidence intervals, which suggest that the estimate is stable and less influenced by random error. Precision improves with larger sample sizes, more reliable measures, and better control of confounding variables.

As demonstrated by Button et al. (2013), underpowered studies tend to produce inflated effect sizes when significant effects are found and are more likely to yield null results when effects are real but too small to detect with limited data. This pattern contributes to the replication crisis—the failure to reproduce findings across studies—which erodes confidence in psychological research.
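
The pattern described above can be reproduced in a small simulation. This is a sketch under invented assumptions, not a reanalysis of Button et al. (2013): the true effect (d = 0.3), the sample size (20 per group), the number of simulated studies, and the function name `simulate_studies` are all hypothetical, and significance is judged with a normal approximation (|z| > 1.96) rather than an exact t test.

```python
import math
import random
import statistics

def simulate_studies(true_d, n_per_group, n_studies=2000, seed=7):
    """Simulate many two-group studies and return all observed effect sizes
    plus the subset that reached significance."""
    rng = random.Random(seed)
    all_d, significant_d = [], []
    for _ in range(n_studies):
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        treated = [rng.gauss(true_d, 1) for _ in range(n_per_group)]
        pooled_sd = math.sqrt(
            (statistics.variance(control) + statistics.variance(treated)) / 2
        )
        d = (statistics.mean(treated) - statistics.mean(control)) / pooled_sd
        # Test statistic for equal group sizes; compared against 1.96 as a
        # normal approximation to the t critical value.
        z = d / math.sqrt(2 / n_per_group)
        all_d.append(d)
        if abs(z) > 1.96:
            significant_d.append(d)
    return all_d, significant_d

all_d, sig_d = simulate_studies(true_d=0.3, n_per_group=20)
detection_rate = len(sig_d) / len(all_d)

print(f"Share of studies reaching significance: {detection_rate:.2f}")
print(f"Mean effect across all studies:         {statistics.mean(all_d):.2f}")
print(f"Mean effect among significant studies:  {statistics.mean(sig_d):.2f}")
```

Most simulated studies miss the real effect, and the ones that reach significance overestimate it, because only unusually large sample differences clear the threshold when samples are small.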

Thus, building power and precision into study design is not merely a technical requirement but a foundational aspect of credible science. Researchers should report not only p-values but also effect sizes and confidence intervals to give a fuller picture of their results.

When a Null Result is Theoretically Meaningful

Not all null results signal a problem with the study design. In some cases, they offer strong theoretical insights. A theoretically meaningful null result occurs when a well-powered, well-controlled study fails to confirm a hypothesis derived from theory. Such outcomes challenge assumptions and refine models.

For example, stereotype threat theory posits that negative stereotypes can impair academic performance among marginalized groups. If repeated studies, all with high power and validated measures, fail to replicate this effect under specific conditions—say, in private rather than public testing environments—it may suggest that the theory needs boundary conditions (Stoet & Geary, 2012).

Null results can also distinguish between competing explanations. In placebo-controlled drug trials, for instance, if neither the active treatment nor the placebo group shows improvement, this suggests that neither the treatment’s pharmacological ingredients nor psychological expectations are effective. Conversely, if both improve equally, the effect may be driven by expectancy, not the drug itself.

For a null result to be theoretically informative, the study must possess strong construct validity, meaning that the operational definitions and measures accurately reflect the underlying theoretical constructs. If the manipulation was weak or the measure insensitive, a null result tells us little. But if both are strong, the absence of an effect suggests that the theory’s predictions may be incorrect or incomplete.

Transparency and Publication of Null Results

Publishing null results is vital to the integrity and progress of psychological science. However, the field has long suffered from publication bias, the tendency for journals to favor studies with statistically significant findings. This leads to the so-called file drawer problem, where null results are never published, skewing the evidence base (Rosenthal, 1979).

To combat this, researchers are increasingly encouraged to preregister their studies—publicly declaring hypotheses, methods, and planned analyses before data collection. This practice reduces researcher degrees of freedom, such as selectively reporting outcomes or altering hypotheses after seeing the data, thereby increasing the credibility of both significant and null results (Nosek et al., 2018).

Registered Reports, a publication format in which the study’s rationale and methods are peer-reviewed before data collection, offer another solution. If accepted, the study is published regardless of outcome, provided the methods are followed. This format emphasizes methodological rigor over results.

Null results, when transparently reported, contribute to meta-analyses and systematic reviews, allowing more accurate estimation of effect sizes and informing policy and practice. They also prevent resource waste by signaling which hypotheses or interventions may not be worth pursuing further.
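
To illustrate why unpublished null results distort pooled estimates, the sketch below applies a simple fixed-effect (inverse-variance weighted) average to five invented studies, once using only the significant ones and once using the full set. The effect sizes, standard errors, and the function name `pooled_effect` are hypothetical, and real meta-analyses usually involve additional steps such as random-effects models and heterogeneity checks.

```python
def pooled_effect(studies):
    """Fixed-effect pooled estimate from (effect_size, standard_error) pairs,
    weighting each study by the inverse of its variance."""
    weights = [1 / se ** 2 for _, se in studies]
    weighted_sum = sum(w * d for w, (d, _) in zip(weights, studies))
    return weighted_sum / sum(weights)

# Hypothetical studies of the same intervention: (effect size, standard error).
studies = [
    (0.45, 0.20),
    (0.50, 0.22),
    (0.05, 0.20),
    (-0.02, 0.18),
    (0.10, 0.21),
]

# "File drawer" scenario: only studies with |effect / SE| > 1.96 get published.
published = [(d, se) for d, se in studies if abs(d / se) > 1.96]

published_only = pooled_effect(published)
full_record = pooled_effect(studies)

print(f"Pooled effect, significant studies only: {published_only:.2f}")
print(f"Pooled effect, all studies:              {full_record:.2f}")
```

In this toy example, dropping the three null studies more than doubles the pooled estimate, which is the distortion that transparent reporting is meant to prevent.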

Summary

Handling null results with intellectual honesty and methodological rigor is essential for advancing psychological science. A null result, defined as the failure to observe a statistically significant difference between experimental conditions, should not be interpreted as conclusive evidence that the independent variable had no effect on the dependent variable. Instead, it should prompt researchers to interrogate the study’s design, from the strength of its manipulation to the sensitivity of its measures and the adequacy of its sample size and statistical power.

Null results may stem from insufficient differences between groups, particularly when manipulations are weak or measurement tools are too blunt to detect subtle shifts. Instruments may suffer from ceiling or floor effects, restricting variability and hiding meaningful change. Manipulation checks are vital in confirming whether the psychological state of interest was effectively altered. Additionally, high within-group variability, caused by measurement error, individual differences, or situational noise, can inflate error variance and obscure true effects.

A study’s statistical power and precision—terms that reflect the ability to detect and estimate true effects—are critical in interpreting null results. Low-powered studies are vulnerable to Type II errors and contribute to the broader replication crisis in science. When null effects are produced by methodologically rigorous and well-powered studies, they can offer theoretical insight by ruling out expected effects or clarifying boundary conditions. Transparent reporting practices, including preregistration and Registered Reports, are crucial in ensuring that null results are published and contribute to the cumulative scientific record.

In conclusion, null results are not the absence of discovery but an essential part of the research process. They challenge assumptions, refine hypotheses, and signal when interventions need reassessment. A mature science must embrace null results, interpret them cautiously, and report them transparently.

Key Takeaways

Null results are informative, not failures: A null result does not necessarily mean the independent variable had no effect. Instead, it highlights the need to examine design, measurement, and power to interpret what the result really means.

Insufficient manipulations and insensitive measures often underlie null effects: When manipulations are too weak or measurement tools lack granularity, true effects may go undetected, leading to misleading null outcomes.

Within-group variability can obscure effects: High variability due to measurement error, individual differences, or situation noise can mask actual differences between experimental conditions.

Power and precision are critical for interpretation: Low-powered studies are prone to Type II errors. Conducting power analyses and using reliable tools enhances the credibility of both significant and null results.

Transparent reporting strengthens scientific integrity: Publishing well-designed studies with null results, especially through preregistration and Registered Reports, combats publication bias and supports cumulative theory development.

Glossary

boundary conditions: the specific conditions or contexts under which a theory or effect is expected to apply.

ceiling effects: measurement limitations that occur when most participants score near the top of a scale, limiting the ability to detect further improvement.

confidence intervals: a range of values derived from sample data within which the true population parameter is likely to fall, often expressed at a 95% confidence level.

construct validity: the extent to which a measure or manipulation accurately reflects the theoretical construct it intends to represent.

Cronbach’s alpha: a statistic used to measure the internal consistency or reliability of a scale; values closer to 1.0 indicate greater reliability.

degrees of freedom: the number of independent values or quantities that can vary in a statistical calculation without violating any given constraints. In hypothesis testing and estimation, degrees of freedom often refer to the number of values in the final calculation of a statistic that are free to vary.

dependent variable: the outcome in an experiment that researchers measure to determine the effect of the independent variable.

error variance: variability in the data that cannot be explained by the independent variable and is attributable to measurement error, individual differences, or situational factors.

file drawer problem: the tendency for null or non-significant results to go unpublished, leading to biased representations of evidence in the literature.

floor effects: measurement limitations that occur when most participants score near the bottom of a scale, limiting the ability to detect decreases in scores.

granularity: the level of detail or resolution offered by a measurement instrument; greater granularity allows more precise distinctions between values.

independent variable: the variable in an experiment that is manipulated by the researcher to examine its causal effect on the dependent variable.

individual differences: naturally occurring variability among participants in traits such as personality, motivation, or cognitive ability that can affect experimental outcomes.

insensitive measures: assessment tools that lack the resolution or range needed to detect meaningful differences between groups.

low-powered studies: studies that have a low probability of detecting an effect, often due to small sample sizes, leading to increased risk of Type II errors.

manipulation checks: secondary measures used in experiments to verify that the independent variable successfully induced the intended psychological change.

measurement error: variability in scores caused by inconsistent or inaccurate measurement, rather than by actual differences in the construct being measured.

meta-analyses: statistical procedures that combine the results of multiple studies to estimate the overall effect size and identify patterns across research findings.

null result: an outcome in which no statistically significant difference is observed between experimental conditions.

operational definitions: specific procedures used to define and measure a variable within an experiment.

placebo-controlled trials: experimental studies in which a treatment group is compared to a placebo group to isolate the effect of the active component.

power analysis: a statistical technique used to determine the minimum sample size required to detect an expected effect at a given significance level and target power.

precision: the degree to which an estimate of an effect size is close to the true population value, often reflected in narrow confidence intervals.

preregister: the act of publicly recording a study’s hypotheses, methods, and analysis plan before data collection to reduce bias and increase transparency.

probabilistic: describing conclusions or effects that are likely but not guaranteed, based on the laws of probability; often used to describe findings in behavioral science.

publication bias: the tendency for studies with significant findings to be more likely published than those with null results.

Registered Reports: a journal submission format where peer review occurs before data collection, and acceptance is based on methodological quality rather than outcome.

reliable tools: instruments or measures that yield consistent, repeatable results across time, raters, or testing occasions.

replication crisis: a methodological crisis in science stemming from the difficulty in replicating many published findings, often due to low power, selective reporting, or analytic flexibility.

researcher degrees of freedom: the flexibility researchers have in data collection, analysis, and reporting that can lead to biased or spurious findings if not constrained.

situation noise: uncontrolled environmental or contextual factors that introduce variability into participants’ responses and obscure experimental effects.

standard error: the standard deviation of a sampling distribution, used to estimate the accuracy with which a sample mean approximates the population mean.

statistical power: the probability of correctly rejecting a false null hypothesis, often increased by larger sample sizes, strong manipulations, and reliable measures.

statistically significant: a result that would be unlikely to occur if there were no true effect, typically indicated by a p-value below a pre-specified threshold (e.g., p < 0.05).

stereotype threat theory: a theory suggesting that individuals underperform in situations where they fear confirming negative stereotypes about their social group.

test-retest reliability: a measure of consistency over time; it assesses whether a test produces similar results when administered to the same individuals on multiple occasions.

theoretically meaningful null result: a non-significant outcome that provides useful information about a theory by clarifying its limits or falsifying its predictions.

Type II error: a statistical error that occurs when a study fails to reject a false null hypothesis, erroneously concluding that there is no effect.

within-group variability: variation in responses among individuals within the same experimental condition, which can obscure differences between conditions.

References

Aronson, E., Wilson, T. D., Akert, R. M., & Sommers, S. R. (2019). Social psychology (10th ed.). Pearson.

Button, K. S., Ioannidis, J. P. A., Mokrysz, C., Nosek, B. A., Flint, J., Robinson, E. S. J., & Munafò, M. R. (2013). Power failure: Why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience, 14(5), 365–376. https://doi.org/10.1038/nrn3475

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Routledge. https://doi.org/10.4324/9780203771587

Cohen, J. (1992). A power primer. Psychological Bulletin, 112(1), 155–159. https://doi.org/10.1037/0033-2909.112.1.155

Frattaroli, J. (2006). Experimental disclosure and its moderators: A meta-analysis. Psychological Bulletin, 132(6), 823–865. https://doi.org/10.1037/0033-2909.132.6.823

Hauser, D. J., Ellsworth, P. C., & Gonzalez, R. (2018). Are manipulation checks necessary? Frontiers in Psychology, 9, 998. https://doi.org/10.3389/fpsyg.2018.00998

Nosek, B. A., Ebersole, C. R., DeHaven, A. C., & Mellor, D. T. (2018). The preregistration revolution. Proceedings of the National Academy of Sciences, 115(11), 2600–2606. https://doi.org/10.1073/pnas.1708274114

Rosenthal, R. (1979). The file drawer problem and tolerance for null results. Psychological Bulletin, 86(3), 638–641. https://doi.org/10.1037/0033-2909.86.3.638

Stoet, G., & Geary, D. C. (2012). Can stereotype threat explain the gender gap in mathematics performance and achievement? Review of General Psychology, 16(1), 93–102. https://doi.org/10.1037/a0026617

Watson, D., Clark, L. A., & Tellegen, A. (1988). Development and validation of brief measures of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology, 54(6), 1063–1070. https://doi.org/10.1037/0022-3514.54.6.1063

About the Authors

Zachary Meehan earned his PhD in Clinical Psychology from the University of Delaware and serves as the Clinic Director for the university's Institute for Community Mental Health (ICMH). His clinical research focuses on improving access to high-quality, evidence-based mental health services, bridging gaps between research and practice to benefit underserved communities. Zachary is actively engaged in professional networks, holding membership affiliations with the Association for Behavioral and Cognitive Therapies (ABCT) Dissemination and Implementation Science Special Interest Group (DIS-SIG), the BRIDGE Psychology Network, and the Delaware Project. Zachary joined the staff at Biosource Software to disseminate cutting-edge clinical research to mental health practitioners, furthering his commitment to the accessibility and application of psychological science.

Fred Shaffer earned his PhD in Psychology from Oklahoma State University. He is a biological psychologist and professor of Psychology, as well as a former Department Chair at Truman State University, where he has taught since 1975 and has served as Director of Truman’s Center for Applied Psychophysiology since 1977. In 2008, he received the Walker and Doris Allen Fellowship for Faculty Excellence. In 2013, he received the Truman State University Outstanding Research Mentor of the Year award. In 2019, he received the Association for Applied Psychophysiology and Biofeedback (AAPB) Distinguished Scientist award. He teaches Experimental Psychology every semester and loves Beth Morling's 5th edition.
